How to scrape data from a website (using AI) in 2026

Learning how to scrape data from a website will make you feel like you have superpowers.

I started off learning how to use Python to scrape websites, as it was the main tool everyone told me to use. But now that we have LLMs and no-code AI tools, I had to find a new, easier way to scrape things quickly.

And after testing multiple tools and workflows for months, I finally figured it out.

I’m going to show you how to scrape any website in a way that’s so easy you’re going to be shocked how quickly you’ll be able to do it yourself after reading this article.

I'll show you the exact process I use to scrape any website, the tool I recommend (and why), and a real example where I scrape Reddit to uncover content ideas and startup opportunities. But you’ll also learn how to create any custom flow that is designed for you.

By the end, you'll know how to extract data from any site and automate it to run whenever you need it.

I’m really excited to show you this, so let's just get right into it.

Can you web scrape for free?

Yes, you can scrape data from a website for free. There are a bunch of free tools that can help you start extracting data, but you're most likely going to need to pay as your usage increases.

If you're just doing one-off scrapes or experiments or you're just tinkering with things, then it should generally be free (as I'll show in this article). Where it can cost money, and get expensive, is when you need to integrate different APIs and scrape a bunch of web pages at scale or on a recurring basis.

To keep it short, yes you can scrape websites for free if you're doing small experiments. But you'll most likely need to pay if you're scraping large datasets or have complex use cases.

Can I scrape a website without coding?

Yes, you do not need to know how to code in order to scrape data from a website. Typical web scraping involves using Python or JavaScript, but now with no-code and low-code AI tools, any non-technical person can easily scrape website data.

The workflow I'm going to show below can be done by anyone, technical or not. This way you'll walk away from this tutorial knowing exactly how to extract any form of data from a website whether you're doing one-off experiments or a large ongoing project.

Okay, let's dive in.

How to scrape data from any website in 4 steps

Here are the steps to scraping data from a website:

- Choose what data you want to scrape

- Pick a web scraping tool

- Create your workflow with target URL

- Run the scraper and export your data

Let’s go over each one.

1. Choose what data you want to scrape

The first step is to figure out what type of data you want to scrape and from where you want to scrape it. I know this is pretty self-explanatory, but I think it's important to build out your plan before you start using a method of web scraping.

Maybe you want to scrape profile information from LinkedIn, or you want to scrape a database of URLs to enrich data on specific prospects. Or maybe, you want to scrape a subreddit on Reddit to uncover problems people are having (for content ideas you can use) — I do this one a lot.

Whatever the case may be, the first step is to understand if you're going to be scraping one URL or multiple.

Here are some things to consider before you start:

- What specific data points do you need? (names, emails, prices, reviews, job titles, etc.)

- Is it a one-time scrape or recurring? (daily price monitoring vs. one-off research project)

- How much data are you pulling? (a few dozen items or thousands of pages)

- What format do you need it in? (Google Sheets, CSV, JSON, or directly into your CRM)

- Is the data structured or messy? (clean tables vs. scattered across multiple page elements)

Understanding these details will help you pick a tool that can handle both one-off and bulk scrapes. Pretty much every web scraping tool, even a simple Chrome extension, can pull data from a single URL.

What takes it a step further is a tool that can take in a Google Sheet with a list of URLs and automatically use AI (with a premium LLM of your choice) to pull the right information.
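To make the "list of URLs" idea concrete, here is a minimal Python sketch of what a bulk scraper has to do before it fetches anything: read the sheet, skip broken rows, and drop duplicates. It assumes the sheet was exported as CSV with a column named `url`. The column name, the `load_urls` helper, and the sample rows are all made up for illustration and are not how any particular tool does it.

```python
import csv
import io
from urllib.parse import urlparse


def load_urls(csv_text, column="url"):
    """Return unique, well-formed http(s) URLs from CSV text, in original order."""
    seen = set()
    urls = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        candidate = (row.get(column) or "").strip()
        parts = urlparse(candidate)
        # Skip blanks and anything that is not a full http(s) URL.
        if parts.scheme not in ("http", "https") or not parts.netloc:
            continue
        if candidate not in seen:
            seen.add(candidate)
            urls.append(candidate)
    return urls


# Hypothetical sheet export; the rows are placeholders, not real prospects.
SAMPLE_SHEET = """url,notes
https://example.com/pricing,first prospect
not-a-url,typo in the sheet
https://example.com/pricing,duplicate row
https://example.org/about,second prospect
"""

print(load_urls(SAMPLE_SHEET))
```

Cleaning the list up front keeps you from paying to scrape the same page twice, which matters once you go from a handful of URLs to thousands.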

I’m going to assume, at this point, you already have an idea of what you want to scrape. I also have one in mind currently that I will build out in this article.

Whatever the case may be, the next step is to find the right tool to help you do it.

2. Pick a web scraping tool

There are a lot of web scraping tools out there, from OG tools like Octoparse and Scrapy all the way to newer AI-powered no-code tools like n8n or Gumloop.

If I were picking a tool today, especially because it's more important than ever to become AI proficient, I would pick an AI web scraping tool over a traditional tool that simply parses HTML on a page.

Traditional scrapers work fine if you're pulling structured data from a single page with clean tables. But they break easily when a website changes its layout, and they require knowledge of programming languages like Python to handle complex workflows.
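If you're curious what "break easily" looks like in practice, here is a small sketch of a traditional scraper using only Python's built-in `html.parser`. It is hard-coded to look for `<td class="price">` cells. Both HTML snippets and the `PriceTableParser` class are invented for this example, so treat it as an illustration rather than a real scraper.

```python
from html.parser import HTMLParser


class PriceTableParser(HTMLParser):
    """Collects the text inside every <td class="price"> cell."""

    def __init__(self):
        super().__init__()
        self._in_price_cell = False
        self.prices = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs for the opening tag.
        if tag == "td" and ("class", "price") in attrs:
            self._in_price_cell = True

    def handle_endtag(self, tag):
        if tag == "td":
            self._in_price_cell = False

    def handle_data(self, data):
        if self._in_price_cell and data.strip():
            self.prices.append(data.strip())


def extract_prices(html):
    parser = PriceTableParser()
    parser.feed(html)
    return parser.prices


# Made-up markup: the same product before and after a site redesign.
ORIGINAL_PAGE = '<table><tr><td class="name">Widget</td><td class="price">$9.99</td></tr></table>'
REDESIGNED_PAGE = '<div class="card"><span class="cost">$9.99</span></div>'

print(extract_prices(ORIGINAL_PAGE))    # the price cell is found
print(extract_prices(REDESIGNED_PAGE))  # nothing matches the old selector
```

The price is still on the redesigned page, but the scraper returns nothing because the tag and class it was built around no longer exist. That is the maintenance cost you take on with hand-mapped fields.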

AI-powered scrapers are different in that they can adapt to changes, understand context, and extract data in real-time without needing you to manually map every field. This is especially true of automation tools that allow you to build AI agents. Instead of writing functions or dealing with messy HTML, you just tell the bot what you want and it can figure out the rest on its own.

Why I recommend Gumloop for web scraping

I've tested a bunch of different scraping tools, and Gumloop is the one I keep coming back to. Mostly because it has:

- A built-in AI assistant (Gummie) that helps you build any web scraping workflow simply by explaining what you want to do in natural language

- Support for any LLM like ChatGPT, Claude, Gemini, Llama or whatever model is best for your use case, all without needing extra API keys

- It has a clean UI/UX that is actually fun to use, which matters when you're building complex automation workflows

- Handles both single and bulk scrapes so you can scrape one URL or upload a CSV file with thousands of URLs and let it rip

- AI-powered data extraction that doesn’t need you to manually map fields (unless you want to). The AI understands what you're asking for and pulls the right data

- Export options that push your scraped data directly into Google Sheets, CSV files, or other tools in your tech stack

The big difference between Gumloop and traditional scrapers is that it combines automation with AI agents. So when you're extracting data, you're actually building workflows that can scrape, process, and route information wherever you need it to go. And you can easily share these workflows with other members of your team as well.

Okay, if you want to jump right into the tool yourself, you can follow this guided tutorial to build your scraping workflow.

Otherwise, I’m going to build out my own workflow where I’m going to scrape Reddit to uncover both content ideas and startup ideas I can build.

3. Create your workflow with target URL

Gumloop has a bunch of web scraping templates you can use. But you don’t even need templates to be honest.

Gummie, the in-product AI assistant, can build any workflow for you from a simple prompt. Literally just like you would when talking to ChatGPT.

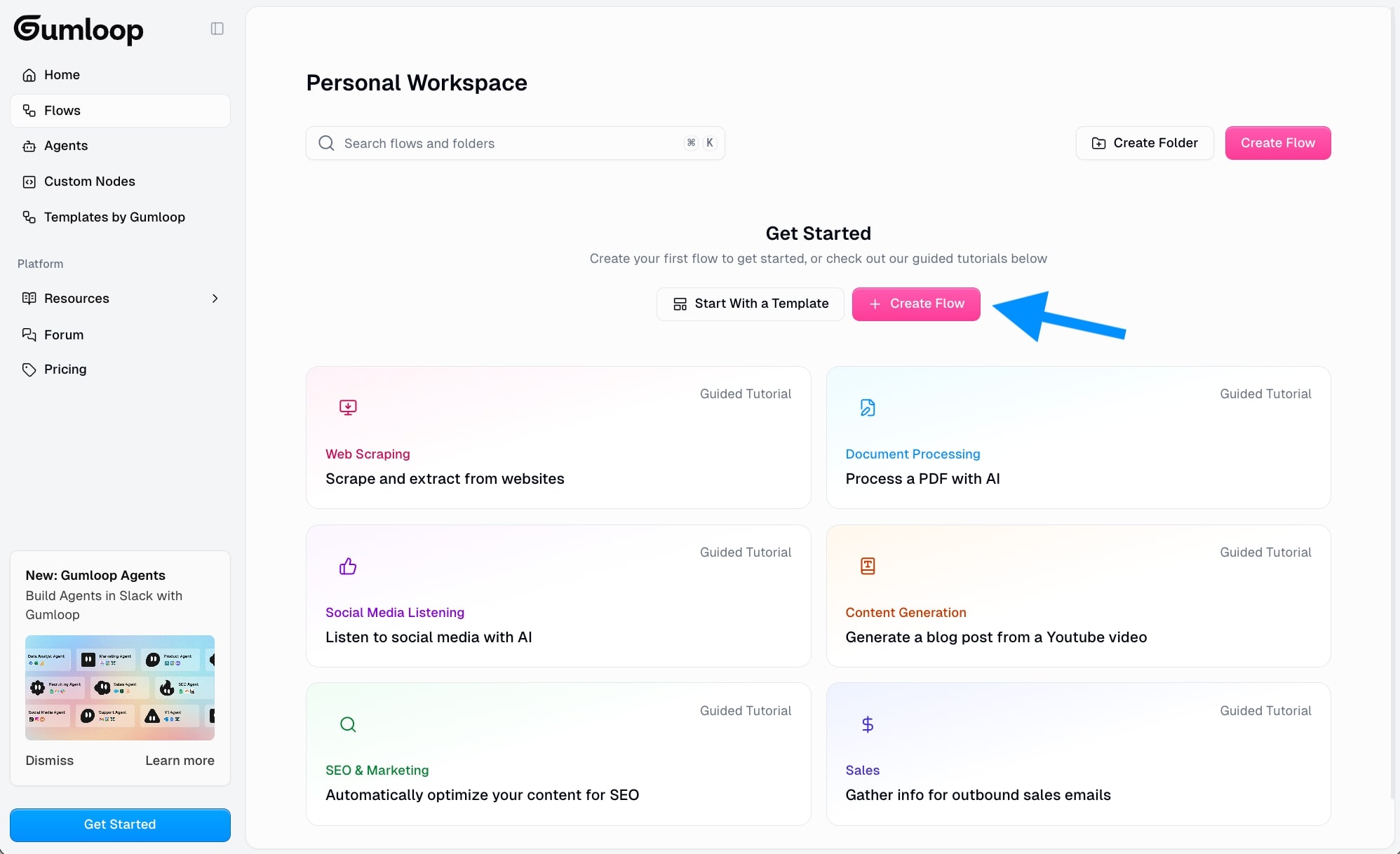

The first step is to open up the Flows workflow and tell Gummie what you want to do. You don’t even need to sign up for an account at this point, so you can follow me along. Just go to this link and click 'Create Flow'.

From here, you’ll be sent to the Gummie chatbot and you can describe exactly what you want to scrape. You can also start with any trigger or integration, so you can manually build first if you don’t want to use Gummie.

But, I would highly recommend you start with Gummie if you’re not familiar with automation tools. Gummie will help walk you through the build process and you can always edit and rework things as you build.

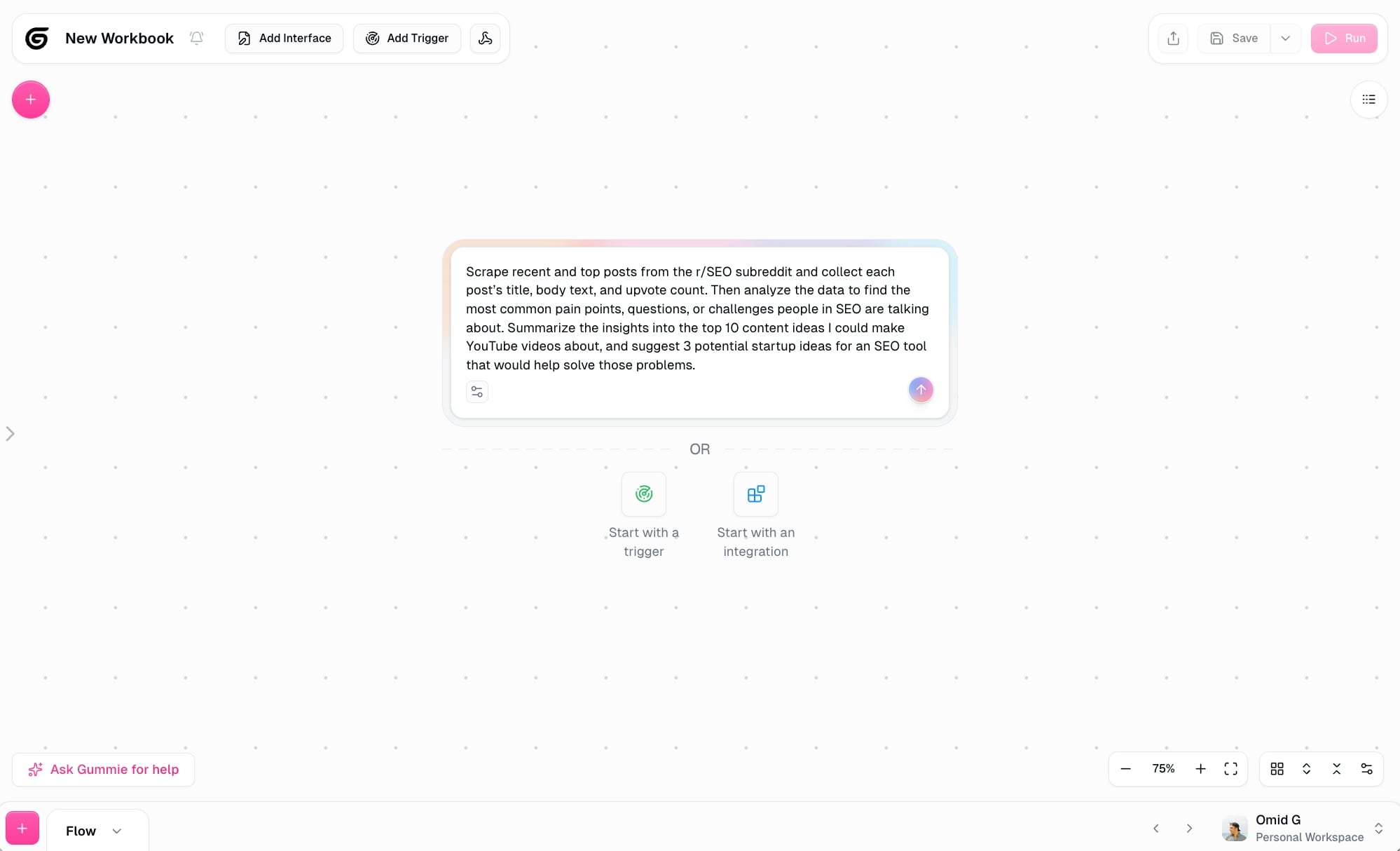

I’m going to start with Gummie and use this prompt:

Scrape recent and top posts from the r/SEO subreddit and collect each post’s title, body text, and upvote count. Then analyze the data to find the most common pain points, questions, or challenges people in SEO are talking about. Summarize the insights into the top 10 content ideas I could make YouTube videos about, and suggest 3 potential startup ideas for an SEO tool that would help solve those problems.

Hit enter, and give it a few seconds while the AI starts to plan out your build. Gummie will start to add “nodes” onto the canvas to start building your customized flow. A node is essentially a tool that is used in a workflow — this is generally an integration or AI model. (Yes, I just used an em dash — been using them before AI ruined it.)

As you can see, the first node Gummie added was the Reddit node (because I asked it to scrape Reddit). If you tell it to scrape a specific website, it will give you the web scraping node designed to scrape any URL you give it.

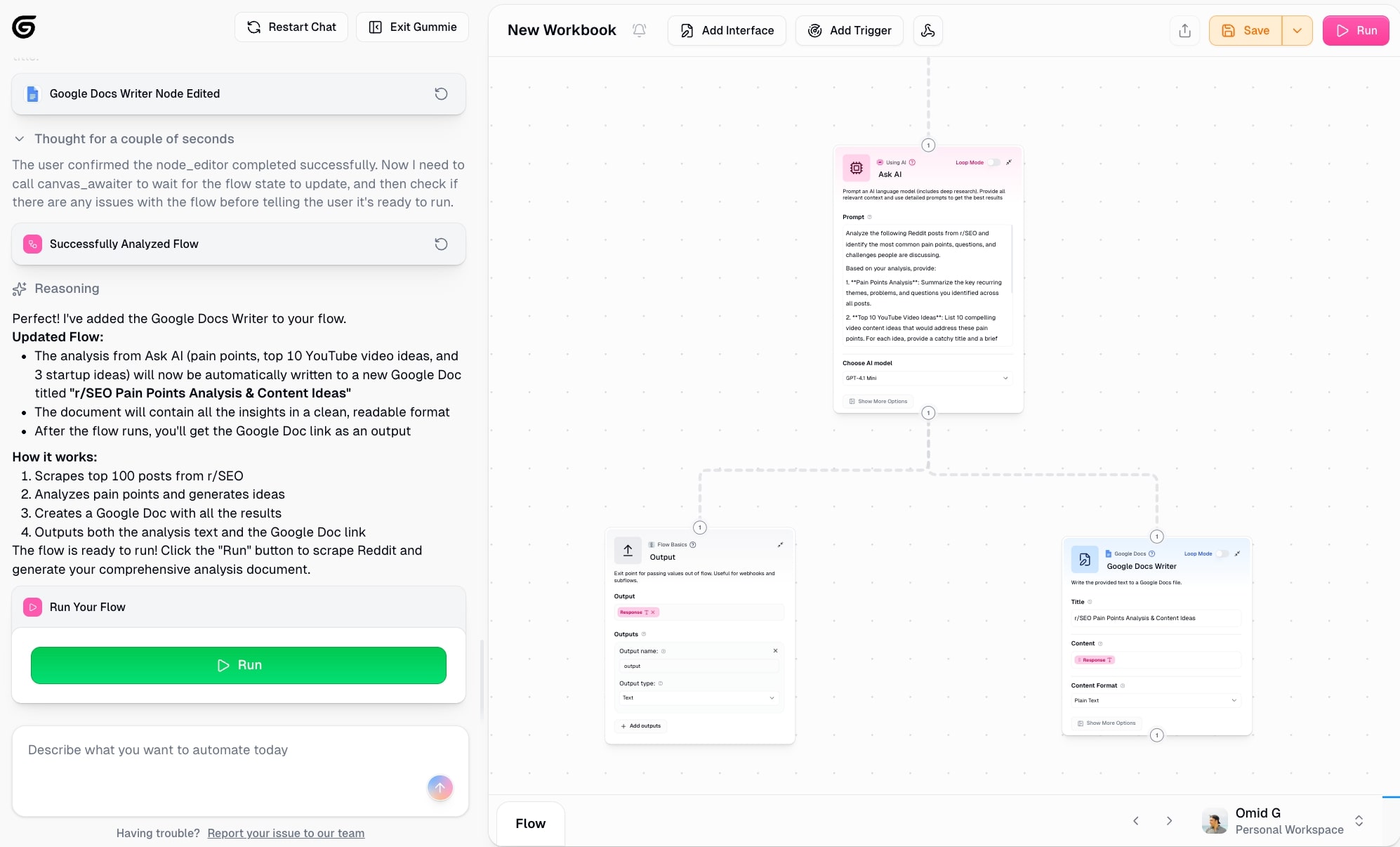

Gummie will start to add on more nodes. As you can see in the screenshot above, the building canvas is on the right and the Gummie chatbot is on the left. Gummie made a plan on what nodes this entire workflow needs, and how they should be connected to each other.

After a couple minutes, depending on the complexity of your initial prompt, you will have a completed workflow.

As you can see, this workflow consists of:

- Reddit node: This scrapes any subreddit

- Combine text: This takes both the title and content of a Reddit thread

- Join List Items: This takes all that context so we can feed it into AI

- Ask AI: This is any LLM model with a specific prompt on what to do with the Reddit data that is scraped

- Output: This then gives us the output of our request (content and startup ideas)
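If it helps to see the same shape in code, here is a rough Python sketch of what those nodes do conceptually. This is not Gumloop's implementation. The function names, the prompt wording, and the sample posts are all my own placeholders, and the "AI" is a stand-in function so the sketch runs without any API key.

```python
def combine_text(post):
    """Combine text step: merge one post's title and body into one string."""
    return f"Title: {post['title']}\nBody: {post['body']}"


def join_list_items(items, separator="\n\n"):
    """Join List Items step: flatten the list into one block of context."""
    return separator.join(items)


def build_prompt(context):
    """Ask AI step, part 1: wrap the scraped context in instructions."""
    return (
        "Find the most common pain points in these posts, then suggest "
        "10 YouTube video ideas and 3 SEO tool ideas.\n\n" + context
    )


def run_flow(posts, ask_ai):
    """Chain the steps; ask_ai is any callable that maps a prompt to text."""
    combined = [combine_text(post) for post in posts]
    context = join_list_items(combined)
    return ask_ai(build_prompt(context))


# Hand-written sample posts, not real scraped data.
SAMPLE_POSTS = [
    {"title": "Traffic dropped after an update", "body": "What should I check first?"},
    {"title": "Is link building still worth it?", "body": "Curious what works now."},
]


def fake_ask_ai(prompt):
    """Stand-in for a real model call so the sketch runs offline."""
    return f"(model reply to a {len(prompt)}-character prompt)"


print(run_flow(SAMPLE_POSTS, fake_ask_ai))
```

Passing `ask_ai` in as a parameter mirrors the idea that the model is swappable: the scraping and text prep stay the same no matter which LLM you pick.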

We could also add nodes to send this output to a Google Sheet, Doc, Slack message, email, or really any place. The current setup will simply output the ideas.

But, let’s actually modify this.

I want to get a full analysis of the top pain points in this subreddit, along with the content and startup ideas. The current setup just summarizes the pain points, but I also want to know what those pain points are myself.

Here is a basic prompt I’m using to add this:

As you can see, it started working on the update right away!

Now, it also added the Google Docs Writer node:

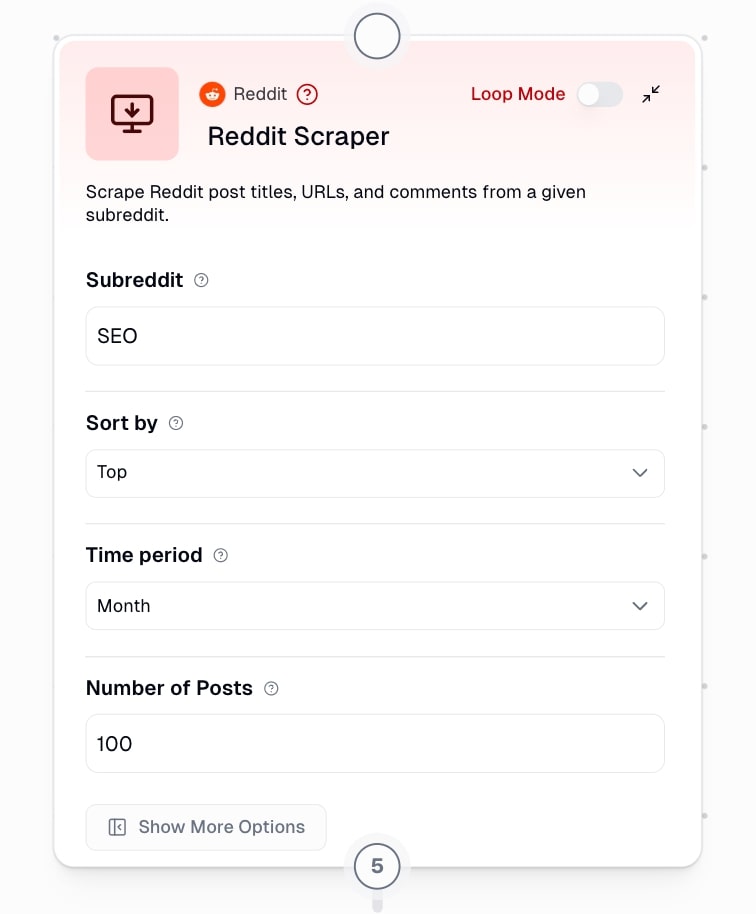

Before I click run, I want to double check the Reddit and AI nodes. For Reddit, I want to make sure the Subreddit is correct. I also want to make sure that I’m scraping the top posts within the past year. This will give me a good timeline. As you can see from the screenshot below, the default mode was pretty spot on; I just changed the time period (after I took the screenshot).

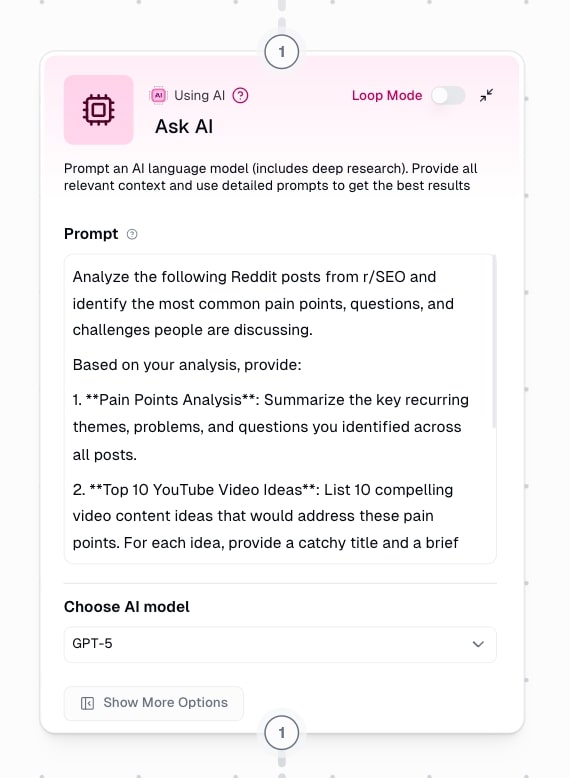
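For the code-curious, here is roughly what "top posts from the past year" looks like if you did it by hand in Python. I'm assuming Reddit's public JSON listing format here (a `top.json` URL with `t` and `limit` parameters, and posts nested under `data.children`). I don't know whether the Reddit node works this way under the hood, and the sample payload below is hand-written, not a real response. The sketch makes no network request, and real access may need authentication and is subject to Reddit's rate limits and terms.

```python
from urllib.parse import urlencode


def top_posts_url(subreddit, period="year", limit=100):
    """Build the listing URL for a subreddit's top posts in a time window."""
    query = urlencode({"t": period, "limit": limit})
    return f"https://www.reddit.com/r/{subreddit}/top.json?{query}"


def parse_listing(listing):
    """Pull title, body text, and upvote score out of a listing payload."""
    posts = []
    for child in listing["data"]["children"]:
        data = child["data"]
        posts.append(
            {
                "title": data["title"],
                "body": data.get("selftext", ""),
                "upvotes": data.get("score", 0),
            }
        )
    return posts


# Hand-written payload in the assumed listing shape, for illustration only.
SAMPLE_LISTING = {
    "kind": "Listing",
    "data": {
        "children": [
            {"kind": "t3", "data": {"title": "Example post", "selftext": "Example body", "score": 42}},
            {"kind": "t3", "data": {"title": "Link-only post", "score": 7}},
        ]
    },
}

print(top_posts_url("SEO"))
print(parse_listing(SAMPLE_LISTING))
```

The three fields it keeps (title, body, upvotes) match what I asked Gummie to collect in the original prompt.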

Next, I want to look at the AI node. This is where we set what our prompt is, and also what LLM model we want to use.

The prompt Gummie gave the AI to execute on is actually really good as is. I will get 10 YouTube video ideas from the common pain points people have in this Subreddit, and I’ll also get 3 tool ideas.

I kept the prompt as is. The only thing I changed was the AI model. In this case, I chose GPT-5 as I think it’s the best. It might be overkill for this analysis, and use up more credits than a different model, but what can I say, I like the best.

Okay, now we are good to go to step four.

4. Run the scraper and export your data

If everything went smoothly in step 3, all we have to do now is run our flow.

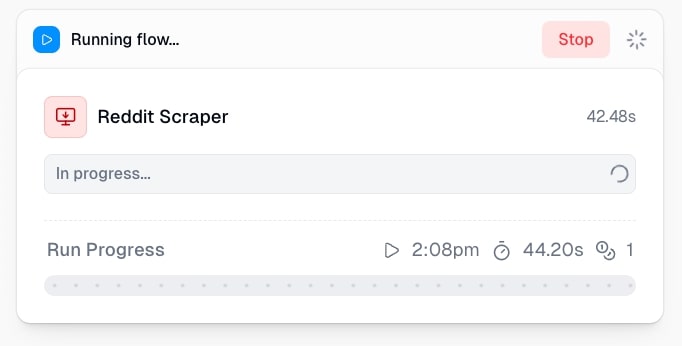

The time it takes to scrape all the data and organize it will depend on how complex our flow is and the AI model we used.

Our flow was not that complex to be honest. But I did use a more advanced premium LLM model, so it took a couple minutes to run. Here’s what my workflow is doing in real time:

- Scraping the top 100 posts in the SEO Subreddit

- Using GPT-5 to analyze all of the posts to find common pain points

- Giving us a list of 10 YouTube video ideas from those pain points

- Giving us 3 startup ideas for an SEO tool based on those pain points

- Writing all of this inside of a Google Doc we will receive

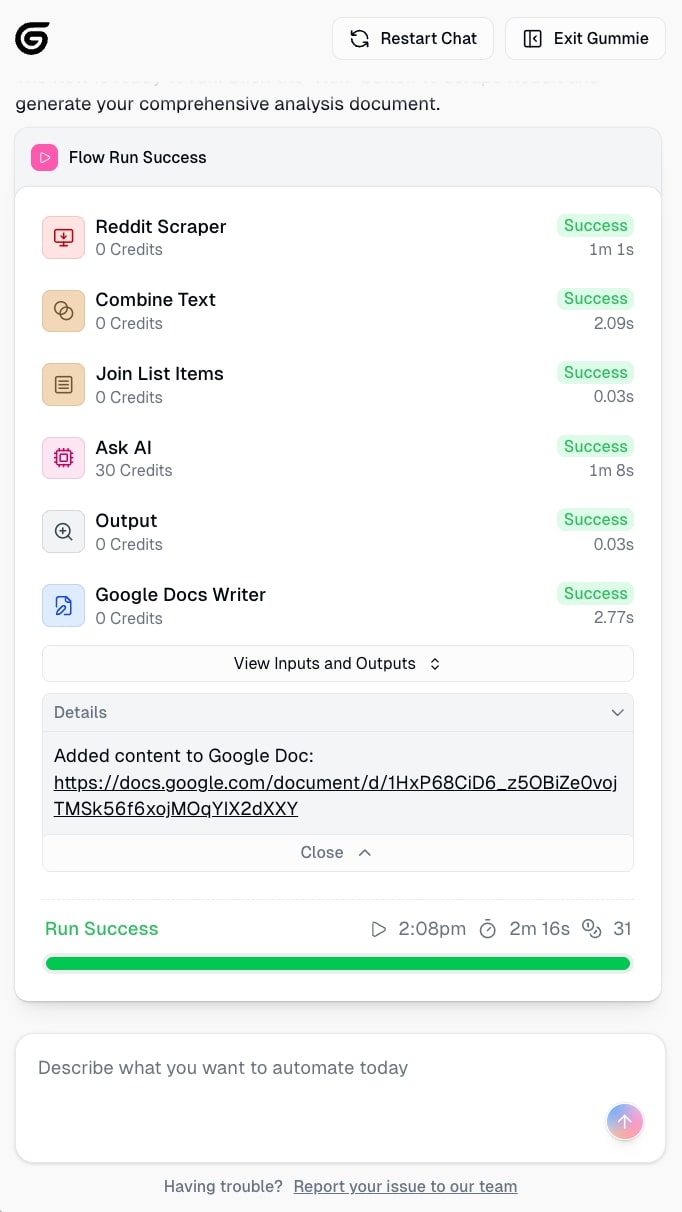

It’s a lot, and the flow was successful after just 2 minutes. What would have taken a few days (100 posts analyzed deeply!) was done in a couple minutes. Wild.

Now, we have a Google Doc link that should have everything we asked Gummie to scrape.

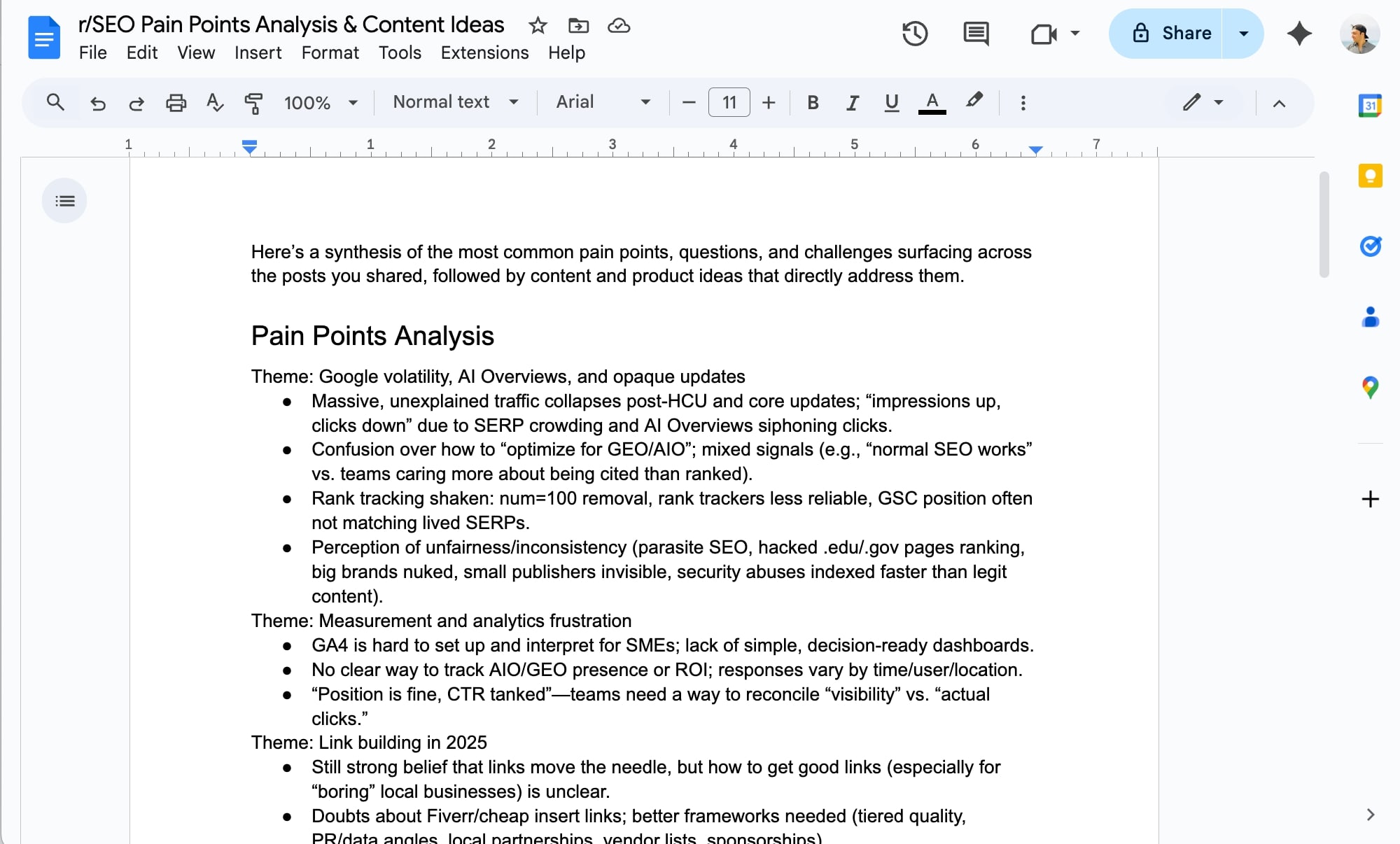

Clicking on the link, we have a full analysis on all the themes and pain points from the top posts in the Subreddit:

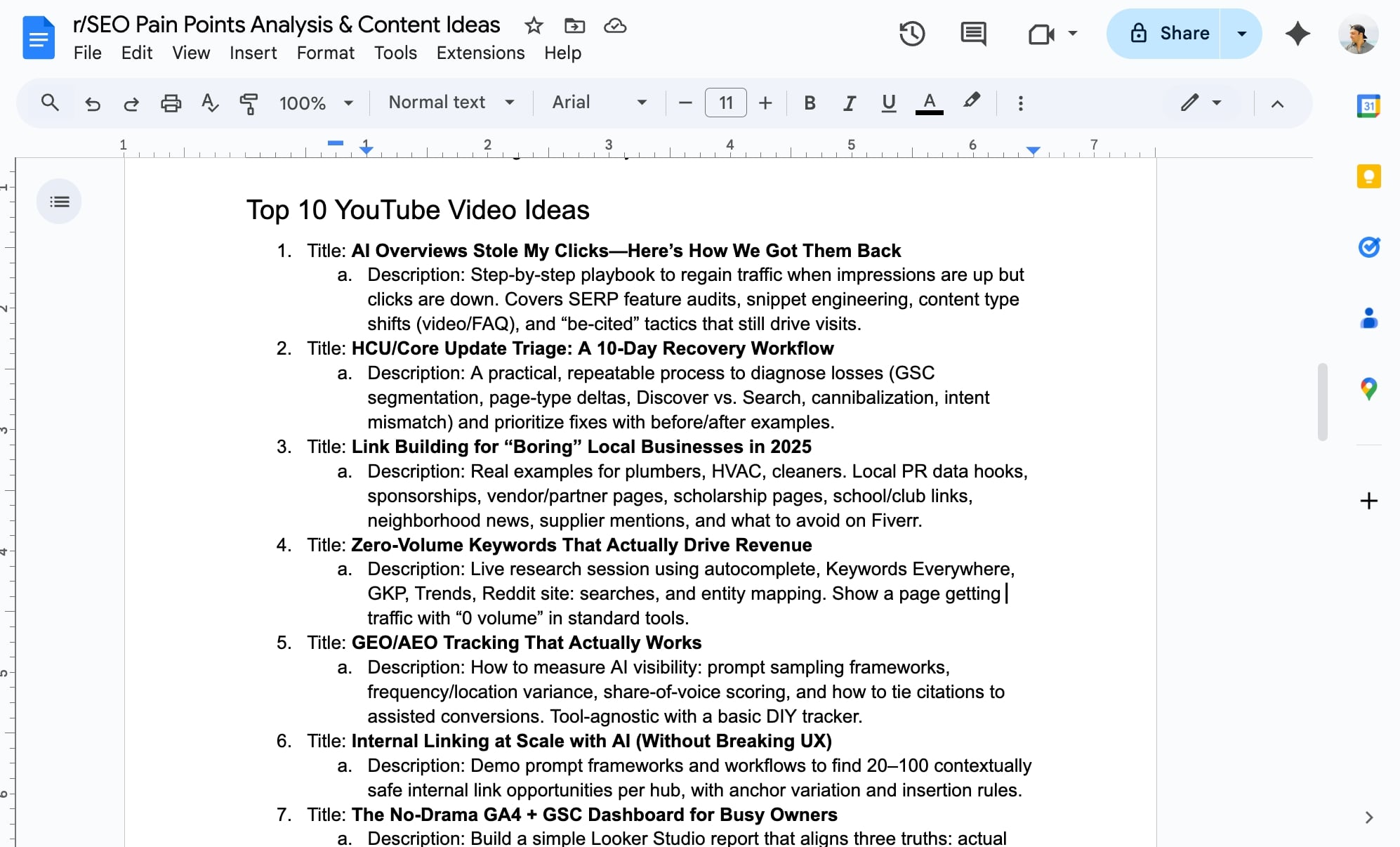

In the same document, we also have 10 YouTube video ideas (title + description) based on these pain points:

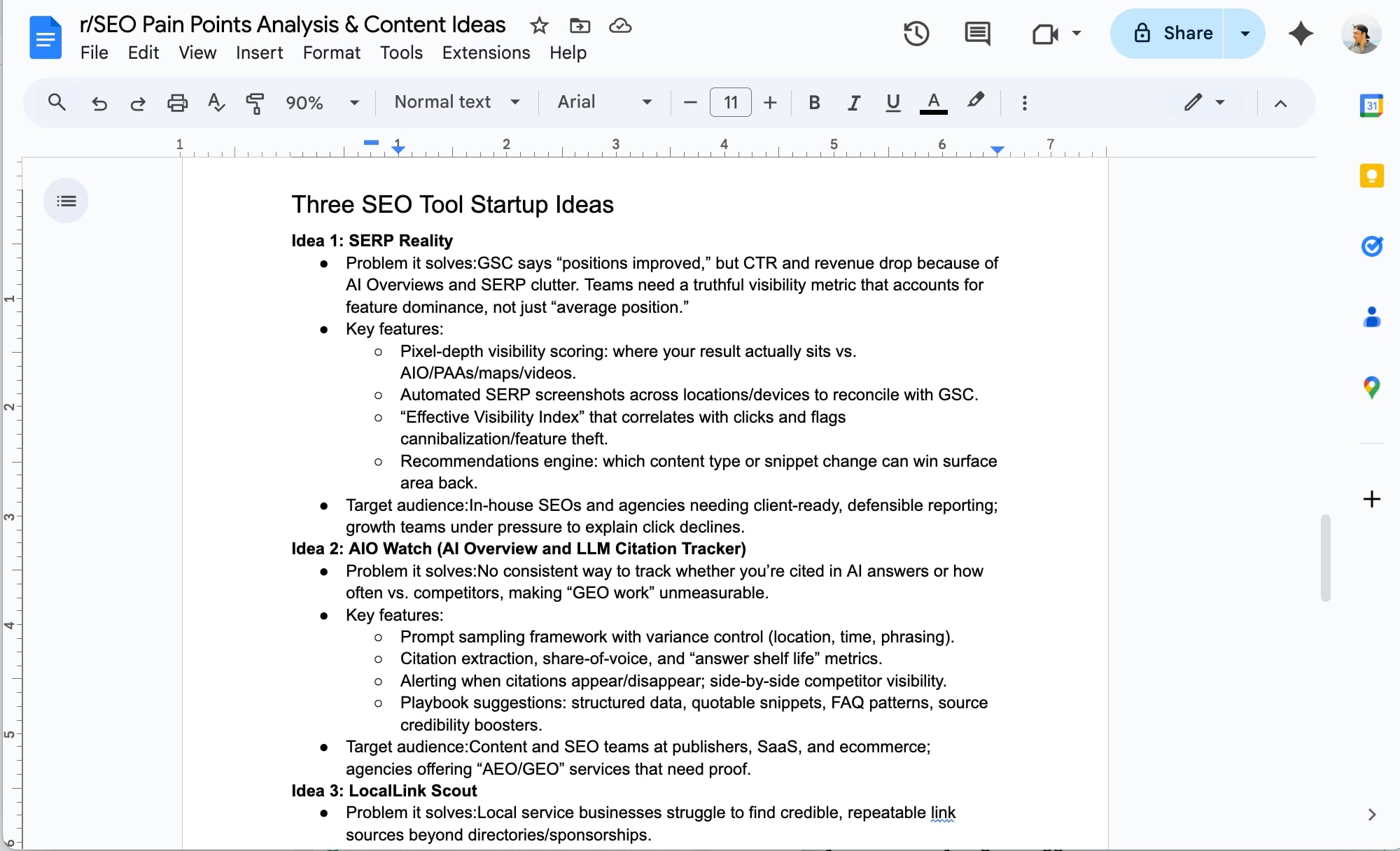

We also have 3 SEO tool ideas to build based on the pain points people actually have:

These can give us startup ideas that solve real problems people currently have, and aren’t just solutions in search of a problem.

And that’s it! Now you can go and create your own scraping workflow.

Just make sure that you follow rules and regulations and don’t scrape anything you legally can’t. Consult with your local laws. This post was for entertainment and educational purposes only, and I do not condone scraping what you’re not supposed to.

Automate more than just web scraping

And just like that, you learned how to scrape data from a website using AI. It's such an easy scraping method that any beginner could do it.

I hope by now you're just as excited as I am to go out and build these types of workflows. I only showed you scraping Reddit in this tutorial, but you can literally use this method for any website. Just follow the guided tutorial I linked out to in step 2. You can use it to scrape product pages, competitor sites, job boards, social media, you name it.

But also, know that web scraping is just the beginning. Once you have web data flowing into your workflows (no pun intended), you can do a lot more with it. You can use machine learning models to analyze patterns, transform it into JSON format for your apps, or build point-and-click automations that handle entire scraping projects from start to finish.
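As a tiny example of the "transform it into JSON" part, here is a Python sketch that takes scraped records and serializes them as JSON for an app or as CSV for a spreadsheet. The `to_json` and `to_csv` helpers and the sample record are placeholders I made up, and it uses only the standard library.

```python
import csv
import io
import json


def to_json(records):
    """Serialize a list of dict records as pretty-printed JSON."""
    return json.dumps(records, indent=2)


def to_csv(records, fieldnames):
    """Serialize the same records as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


# Placeholder record, not real scraped data.
RECORDS = [{"title": "How do I recover from a traffic drop?", "upvotes": 120}]

print(to_json(RECORDS))
print(to_csv(RECORDS, fieldnames=["title", "upvotes"]))
```

Keeping records as plain dictionaries until the last step means you can send the same data to either format without rescraping.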

What I really love about Gumloop is that it’s a full automation platform that lets you connect AI to pretty much anything in your tech stack. You can build AI agents that handle research, draft emails, monitor competitors, generate reports, or automate repetitive tasks across your entire workflow. No browser extensions needed (although there is one), and no complicated setup.

The platform is flexible enough for solo operators like me who are just tinkering with automation ideas, but powerful enough that companies like Shopify, Webflow, and Instacart use it to scale their operations.

So if you're looking to automate more than just web scraping, Gumloop is worth checking out. You can start for free and see what kind of workflows you can build.

Happy automating!
